Synology - Some of the Quirks With Synology Hyper Backup

These are my thoughts on the quirks of Synology Hyper Backup that some people may find useful.

Some Background

I bought a Synology DS215j back in 2016 as it was a pain sharing and syncing data between various PCs on my home network.

I initially set it up with 8TB of storage (4TB mirrored) and then upgraded to 16TB of storage (8TB mirrored) a few years later (the old story where you find stuff to store and quickly use up all the available storage space).

During that time I did backups onto external USB hard drives - folder syncing, basically.

That worked OK and wasn't too much of a hassle when there wasn't a lot of data, but as the amount of data on the NAS grew it started to get a bit tedious.

A couple of years ago I upgraded to a DS920+ with 32TB of storage (16TB mirrored), as I was running out of space on the DS215j. The DS215j was OK, but its CPU and 512MB of RAM meant it had started to struggle a bit.

So I bit the bullet and went with the DS920+, which has an Intel Celeron J4125 4-core 2.0GHz CPU and 4GB of RAM, and it does an excellent job as a network, web and FTP server.

However, backing up to USB hard drives was getting time-consuming, so I decided to buy another Synology NAS to back up the DS920+.

I bought a used DS418j a month or two back and put in 14TB of storage to use for automated backups of the DS920+ (the data on the DS920+ at the moment is around 7.5TB).

I decided not to use the little DS215j as a backup machine as it only has 512MB of RAM and a Marvell Armada 375 dual-core 800MHz CPU. The DS418j has a Realtek RTD1293 dual-core 1.4GHz CPU and 1GB of RAM, so I thought it would be OK as a backup machine.

My Reasons For Choosing Hyper Backup

There were two main reasons I decided to give Hyper Backup a try.

Firstly, it's a Synology product and I've been happy with what Synology has to offer.

Secondly, Hyper Backup offered a full system backup of all system config as well as data, so if the DS920+ ever went legs up I could run a single restore to recover both the system config (without reconfiguring it manually) and all the data in one go.

This fitted well, as I also wanted to upgrade the Synology file system from ext4 to Btrfs to use virtual machines, and Synology recommended Hyper Backup as an easy way to perform this upgrade.

So I set up Hyper Backup on both the DS920+ and the DS418j, together with a scheduled full system backup task, and then pressed go.

How Did Hyper Backup Perform?

The first backup of 7.5TB of data on the DS920+ to the DS418j took ~31 hours (with compression enabled).

I thought this was a bit over the top - but then, as it ran in the background, who gives a rat's.

So I left it for 5 or 6 weeks, and every week I'd check the backup job, see the "Backup completed successfully" message on the DS920+ and think, yeah, it's all good.

However, last weekend I decided to use the Synology Backup Explorer tool to browse the full system backup on the DS418j and maybe extract a file or two as a test.

That's really when the fun began.

Hyper Backup Fun And Games - Part 1

Selecting the backup task in the DS920+ Hyper Backup window and then selecting the Version List, I clicked on "Backup Explorer" for the latest backup version.

The Backup Explorer window opened and showed a greyed out "Volume 1" entry in the left panel (Volume 1 is the name of the DS920+ storage volume), no data in the right panel and a cryptic "No data" comment in the lower right of the Backup Explorer right panel.

What the hey - no data in the backup?

OK, take a deep breath and give it some time to refresh the Backup Explorer window, as it's a 7.5TB backup.

And (luckily), just over 30 minutes later, the right panel of Backup Explorer finally displayed all the folders that had been backed up from the DS920+.

So that solved the "No data" issue - it's just that Backup Explorer is as slow as a wet week.

But 30 minutes - is it 1989 again and I'm using a 386 white box?

I then clicked on the down pointer next to the Volume 1 entry in the left panel to expand the folder list - and about 30 minutes later the list populated.

So I clicked on one of the folders in the left panel - and about 40 minutes later the contents of the folder were displayed in the right panel.

This was all getting a bit tedious.

I selected one of the files and downloaded it, and that process took less than 5 minutes, so I suppose that was to be expected.

Bottom line - it took 1 hour and 45 minutes to view the backup in Backup Explorer and download 1 file from the backup.

Rather unimpressive is putting it politely.

So I decided to search the magic interweb and see if it could offer any advice on Hyper Backup.

The Magic Interweb And Hyper Backup

Generally, the magic interweb is singularly unimpressed with Hyper Backup, with the most common causes for complaint being the time factor, as in "Why does Hyper Backup take days / weeks to complete?" or "Why do I see 'No data' in Backup Explorer?".

I didn't find a single comment that had anything positive to say about Hyper Backup - although to be fair to Synology, the magic interweb is a whiner's paradise (and I mean a real pain-in-the-butt whiner's paradise).

The general opinion seems to be that Hyper Backup has been a bit on the slow side since day 1 and that Synology doesn't seem especially interested in fixing whatever issue(s) may be causing this.

However, there was a common thread in the comments suggesting that one of the factors making Hyper Backup so slow was having data compression enabled.

As I'd done my backup with data compression enabled, I decided to delete the full system backup(s) I'd made with compression enabled and try again with compression disabled.

And the results were . . .

Hyper Backup Fun And Games - Part 2

For comparison, I ran a full system backup with data compression disabled.

Without data compression, the full system backup of 7.5TB of data on the DS920+ to the DS418j took ~29 hours - 2 hours less than with compression enabled, so a slight improvement of ~6%.
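As a rough sanity check on those times, here's the average throughput they imply. This is a quick sketch that assumes decimal units (7.5TB = 7.5 x 10^12 bytes) and treats "~31 hours" and "~29 hours" as exact, so the results are ballpark figures only.

```python
# Rough throughput check using the backup times quoted above.
# Assumptions: 7.5TB = 7.5 x 10^12 bytes (decimal TB), and the approximate
# "~31 hours" / "~29 hours" figures are treated as exact.

DATA_BYTES = 7.5e12


def throughput_mb_per_s(data_bytes: float, hours: float) -> float:
    """Average throughput in decimal megabytes per second."""
    return data_bytes / (hours * 3600) / 1e6


compressed = throughput_mb_per_s(DATA_BYTES, 31)
uncompressed = throughput_mb_per_s(DATA_BYTES, 29)
time_saved_pct = (31 - 29) / 31 * 100  # reduction in run time

print(f"compressed:   {compressed:.1f} MB/s")    # 67.2 MB/s
print(f"uncompressed: {uncompressed:.1f} MB/s")  # 71.8 MB/s
print(f"time saved:   {time_saved_pct:.1f}%")    # 6.5%
```

Under those assumptions it works out to roughly 67 MB/s with compression and 72 MB/s without, and 2 hours out of 31 is about a 6.5% reduction in run time, in line with the ~6% above.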

So how did Backup Explorer perform viewing the backup and extracting a file?

The Backup Explorer window opened and showed a greyed out "Volume 1" entry in the left panel, and the right panel showed "Loading".

9 minutes later the folder list appeared in the right panel.

Well, OK . . . 9 minutes is better than the 30 minutes it took the first time round when the backup data was compressed . . . comparatively.

Clicking on the down pointer next to the Volume 1 entry in the left panel to expand the folder list took 4.5 minutes for the list to populate - again, better than the 30 minutes from the first time round.

So I clicked on one of the folders in the left panel and about 2 minutes later the contents of the folder were displayed in the right panel - much better than the 40 minutes on the first time round.

I selected one of the files and downloaded it, and that process took less than a minute, so again an improvement.

But the whole process from starting Backup Explorer to downloading one file still took over 15 minutes.

15 minutes to retrieve 1 file is better than 105 minutes, but it's still unworkable.

What The Heck Is Going On With Hyper Backup ?

A backup is simply a copy of data (folders, files, whatever) that exists on the source machine.

Whether the backup is packaged/compressed/encrypted is neither here nor there.

From a usability perspective, I expect to be able to open/view/extract/recover files from the backup in a matter of seconds, not minutes or hours.

I back up local drives using Acronis True Image, and I can open a 1TB backup in True Image and extract a file almost instantly, so I don't accept that viewing a backup and recovering a file needs to be time-consuming.

I can also view the source folders and files using Synology File Station or Windows Explorer almost instantly, and I don't accept that backup software can't come close to matching that.

Acronis comes close to Windows Explorer speed, so what's the deal with Synology Hyper Backup?

I think there are three possible causes for Hyper Backup being so slow:

1. Either the Hyper Backup (or Backup Explorer) software is at fault, or

2. Hyper Backup packages the data in a way that causes it to be slow to explore/extract, or

3. Both of the above.

Some Troubleshooting

As I'm not a tech, evaluating the Hyper Backup software from a technical perspective to try and determine what issues it may have is beyond my pay grade, so I decided to focus on how Hyper Backup stores its data.

I think that what I found about the data structure goes some way to explaining why Synology Hyper Backup and Backup Explorer are so slow.

Rather naively, I thought that Hyper Backup would do one of two things:

* Simply replicate the existing source data structure (either with or without data compression or encryption) or

* Package a replica of the existing source data structure as above in a backup archive format, the approach various backup software products use (such as the TIB archive Acronis True Image uses).

Wrong on both counts.

Synology does it in a rather unique and, I suspect, labour-intensive way.

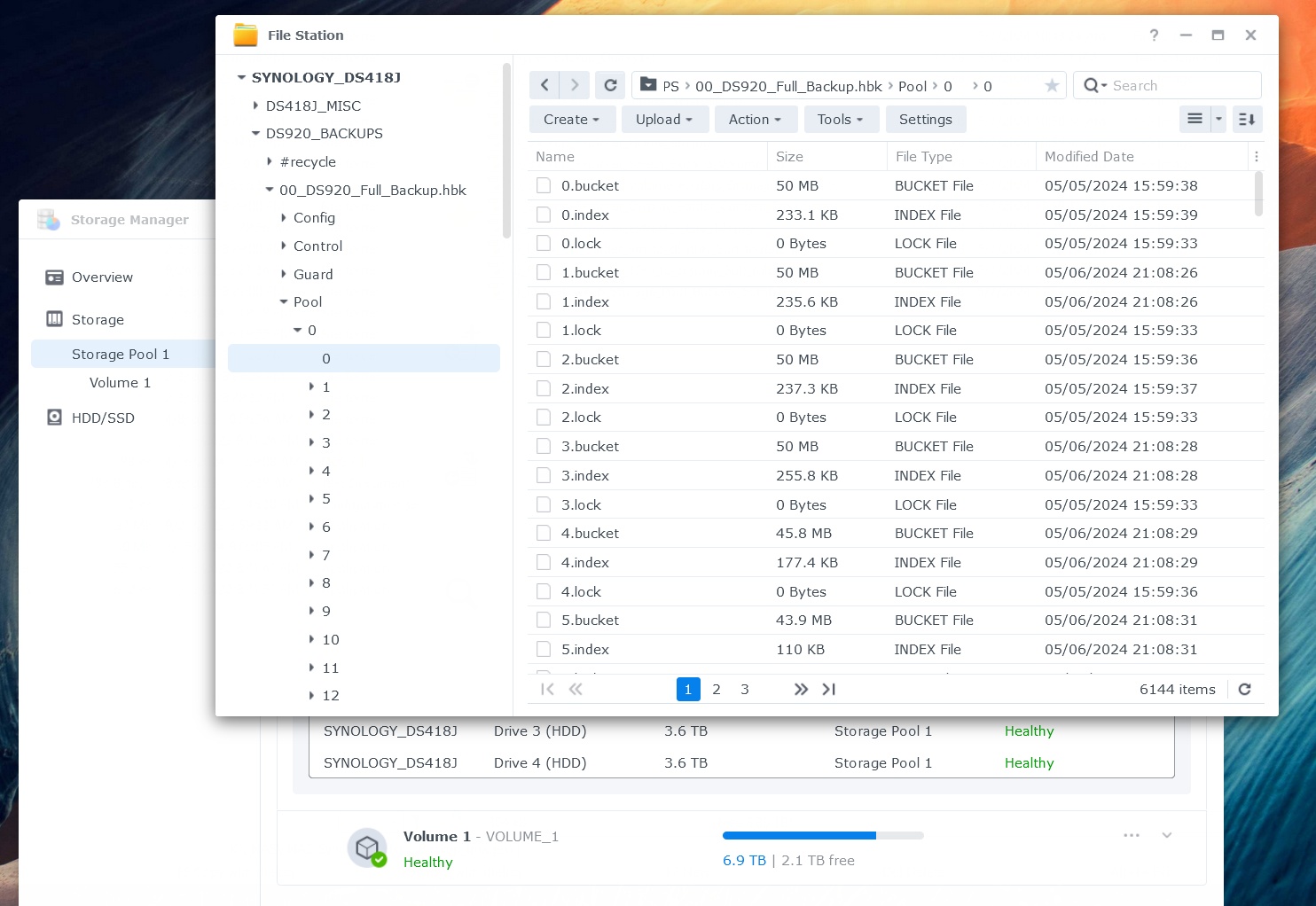

It uses storage "buckets" - and lots of them.

In my case for 7.5TB of data (1.7m files), there are roughly 138,000 data "buckets" of around 50MB each (the actual amount of data stored is closer to 6.9TB as Hyper Backup doesn't store duplicate data).

Here's a picture of one of the Hyper Backup folder with "buckets": (right click and select 'view in new window')

So . . . I'll take a guess and say that one reason Hyper Backup takes so long to back up 7.5TB and 1.7m files is that a fair percentage of that time is spent slicing and dicing (and indexing and de-duplicating) 1.7m files into 50MB "buckets".

Conversely, I'd say one reason Backup Explorer is so slow is that it has to un-slice and un-dice these 50MB data "buckets" back into a representation of the original source data.
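To make the "slicing and dicing" guess above a bit more concrete, here's a toy model of bucket-style storage in Python. It is a hypothetical sketch, not Synology's actual format (as far as I know, Hyper Backup's on-disk layout isn't publicly documented). Files are cut into fixed-size chunks, each chunk is identified by its SHA-256 hash so duplicate data is stored only once, and unique chunks are packed into buckets. The class name `BucketStore` and the tiny `CHUNK_SIZE` and `BUCKET_SIZE` values are made up for illustration. What it shows is that restoring even one file means looking it up in an index and then reading its pieces back out of whichever buckets they landed in.

```python
import hashlib
from dataclasses import dataclass, field

# Toy model of bucket-style backup storage. NOT Synology's actual format:
# the sizes below are tiny, hypothetical values so the example runs
# instantly (the real buckets described above were around 50MB each).
CHUNK_SIZE = 4     # bytes per chunk (hypothetical)
BUCKET_SIZE = 16   # max bytes per bucket (hypothetical)


@dataclass
class BucketStore:
    buckets: list = field(default_factory=lambda: [bytearray()])
    # chunk hash -> (bucket number, offset within bucket, length)
    chunk_index: dict = field(default_factory=dict)
    # file path -> ordered list of chunk hashes making up that file
    file_index: dict = field(default_factory=dict)

    def _store_chunk(self, chunk: bytes) -> str:
        digest = hashlib.sha256(chunk).hexdigest()
        if digest in self.chunk_index:
            return digest  # duplicate data: already stored once, reuse it
        if len(self.buckets[-1]) + len(chunk) > BUCKET_SIZE:
            self.buckets.append(bytearray())  # current bucket is full
        bucket = self.buckets[-1]
        self.chunk_index[digest] = (len(self.buckets) - 1, len(bucket), len(chunk))
        bucket.extend(chunk)
        return digest

    def back_up(self, path: str, data: bytes) -> None:
        """Slice a file into chunks and record which chunks make it up."""
        self.file_index[path] = [
            self._store_chunk(data[i:i + CHUNK_SIZE])
            for i in range(0, len(data), CHUNK_SIZE)
        ]

    def restore(self, path: str) -> bytes:
        """Reassemble one file by fetching each of its chunks from its bucket."""
        out = bytearray()
        for digest in self.file_index[path]:
            bucket_no, offset, length = self.chunk_index[digest]
            out.extend(self.buckets[bucket_no][offset:offset + length])
        return bytes(out)


store = BucketStore()
original = b"hello world, hello world!"
store.back_up("photos/a.txt", original)
store.back_up("copies/a.txt", original)  # an exact duplicate file

assert store.restore("copies/a.txt") == original
print(len(store.buckets), "buckets,", sum(len(b) for b in store.buckets), "bytes stored")
# prints: 2 buckets, 25 bytes stored
```

In this model, both copies of the duplicated file still restore in full; de-duplication only changes how much space the backup takes up (25 bytes stored for 50 bytes of files). Whether index lookups and bucket reads like these explain all of Backup Explorer's slowness is still a guess.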

I'm not a rocket scientist, and if someone can explain to me why the method Hyper Backup uses is a logical approach to storing backup data I'd really like to hear it, as the result in terms of real-world usability is absolutely piss-poor.

A best-case outcome of 15 minutes to retrieve 1 file?

Pull the other one.

And From Here On ?

As a full system backup and full system recovery option, Hyper Backup is no doubt useful, if you're prepared to accept that limited use case and the time those operations take.

However, my main requirement is usability, and that isn't Hyper Backup's strong point - I don't accept a best-case file recovery time of 15 minutes (although 30 years ago I would have been fine with that).

For file backup, I want a backup that's essentially a mirror of the source and one that I can easily access in Windows using Windows Explorer and recover a file.

I also prefer a backup that isn't in a proprietary format, so that in the worst case I can access the backup NAS using any Windows PC or a Mac and recover files.

So I'll probably go with an easier option and use a file sync program I already have on Windows to sync NAS1 (the DS920+) to NAS2 (the DS418j) on a scheduled basis, pretty much as I do now with syncs to USB hard disks.

At least that way I can recover any files I need to from the backup instantly as it's a flat folder structure, uncompressed and unencrypted.
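For anyone curious what a scheduled one-way sync boils down to, here's a minimal sketch in Python. It is not the Windows sync program mentioned above; the function name `mirror` and the `MTIME_TOLERANCE_S` setting are hypothetical, and it assumes both NAS shares are reachable as ordinary folders (for example, mapped network drives). It copies files that are missing or whose size or modification time differs, and deliberately never deletes anything from the destination.

```python
import shutil
from pathlib import Path

# Allow for file systems that store modification times coarsely (FAT uses
# 2-second resolution), so unchanged files aren't needlessly copied again.
MTIME_TOLERANCE_S = 2.0


def mirror(src: Path, dst: Path) -> list[Path]:
    """One-way sync: copy new or changed files from src to dst.

    A file counts as changed if its size differs or its modification time
    differs by more than MTIME_TOLERANCE_S. Nothing in dst is ever deleted.
    Returns the relative paths of the files that were copied.
    """
    copied = []
    for src_file in sorted(src.rglob("*")):
        if not src_file.is_file():
            continue  # only files are copied; parent folders are created as needed
        rel = src_file.relative_to(src)
        dst_file = dst / rel
        s = src_file.stat()
        if dst_file.is_file():
            d = dst_file.stat()
            if (d.st_size == s.st_size
                    and abs(d.st_mtime - s.st_mtime) <= MTIME_TOLERANCE_S):
                continue  # looks unchanged, skip it
        dst_file.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src_file, dst_file)  # copy2 also preserves the mtime
        copied.append(rel)
    return copied


# Example (hypothetical drive letters for the two mapped NAS shares):
# mirror(Path("Z:/data"), Path("Y:/data"))
```

Comparing size and modification time rather than file contents keeps repeat runs quick, since unchanged files are skipped without being read. The trade-off is that a change which leaves both the size and the timestamp untouched would be missed.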

A pity Hyper Backup isn't as usable as Acronis True Image, but it is what it is.

I'll also evaluate the Synology Drive ShareSync application as Synology's blurb about it claims it's the bee's knees for "synchronization across Synology NAS devices" so that may fit quite well.

* Update - I've now tried Synology Drive ShareSync and my thoughts on that experience are included below.

Anyhoo . . . other than Hyper Backup, Synology is the duck's guts for what I need to do, so that's a consolation.

Hopefully someone will find the above information useful, perhaps in explaining why their Hyper Backup jobs (and recovery) take so long.

And just before I leave this topic, one other niggle about Hyper Backup - de-duplication.

Hyper Backup automatically de-duplicates the backup data, presumably to save space. It doesn't seem to be something that can be turned off, so you get it as a matter of course.

I find that annoying for two reasons - firstly, the option should be made available for a user to choose whether or not they want to use it - and secondly, it's my data and represents my digital life over the last 30+ years and I'm well aware there are duplicate files in the data, but that's my choice to leave it that way, for various reasons.

I don't need a nanny called Hyper Backup to dictate what's best for me.

Kind regards,

Shotter_Nail

Synology Drive ShareSync - The Bee's Knees Or What?

Quite acceptable, but with a quirk or two.

ShareSync requires Synology Drive, so I installed both Synology Drive and ShareSync.

Synology Drive allows remote web access to whatever folders you nominate, so that could prove useful - but as I already have external access to my network that isn't a pressing need. Nice enough GUI though.

As for ShareSync . . .

Based on my experience with the speed of Hyper Backup, and imagining I might save some time, I first copied all the data from the DS920+ to the DS418j using a Windows file sync program, which took a couple of days for 7.5TB. Then I set up ShareSync and nominated which folders I wanted to sync (basically all of them).

Since the data was already on the DS418j (the backup NAS), I thought the first sync would be a matter of minutes or, at worst, a matter of hours.

Well . . . the ShareSync process has been running for 4 days now and I estimate about another day to go.

In other words, having already copied the data to the DS418j has made no difference whatsoever, and ShareSync is performing the complete sync process from scratch, as if no data existed on the DS418j.

Synology does make a point of noting in the ShareSync configuration that linking or re-linking NAS devices may cause the sync process to essentially start from scratch, and this is obviously what's happening in my case.

I'm not sure of the logic behind that, as there was an identical data set on each machine, but it is what it is.

On the upside, once the initial sync is done, the background sync runs in real time and changes to a folder on the DS920+ are (almost) instantly replicated on the DS418j. Having a near real-time backup 24/7 is quite useful and probably worth the price of admission on its own.

Just don't ever unlink the 2 NAS machines, or you'll be in for days of syncing if they're re-linked (syncing can be paused if needed without unlinking them).

So all in all, a fair enough product that provides near real-time sync across 2 NAS.

ShareSync can also retain multiple versions of synced files, allows collaborative access to and updating of files on one or both of the NAS machines, and has various config options for syncing, but I'm not especially interested in the bells and whistles.

I'm doing simple A->B file syncing so ShareSync will do the job (providing I never unlink/relink the 2 NAS).
